Mitchell Hashimoto on the AI-Assisted Future of Open Source

What the Ghostty creator learned reviewing hundreds of AI-generated pull requests, and why he thinks Git might need a successor

Mitchell Hashimoto wakes up early. Before his toddler stirs, before breakfast, he opens his laptop and checks what landed in the Ghostty repository overnight. Ghostty, a GPU-accelerated terminal emulator, has become one of the most active open source projects in its category. Most mornings, Hashimoto sends new GitHub issues to an AI agent for a first pass. The hit rate hovers around 10 to 20 percent. It is not perfect, but it helps him triage and spot patterns faster than he could alone.

It is a small ritual with larger meaning. Hashimoto is not fighting the rise of AI in open source. He is studying it, learning where it helps, and drawing careful lines around where it does not.

A new rule for a new era

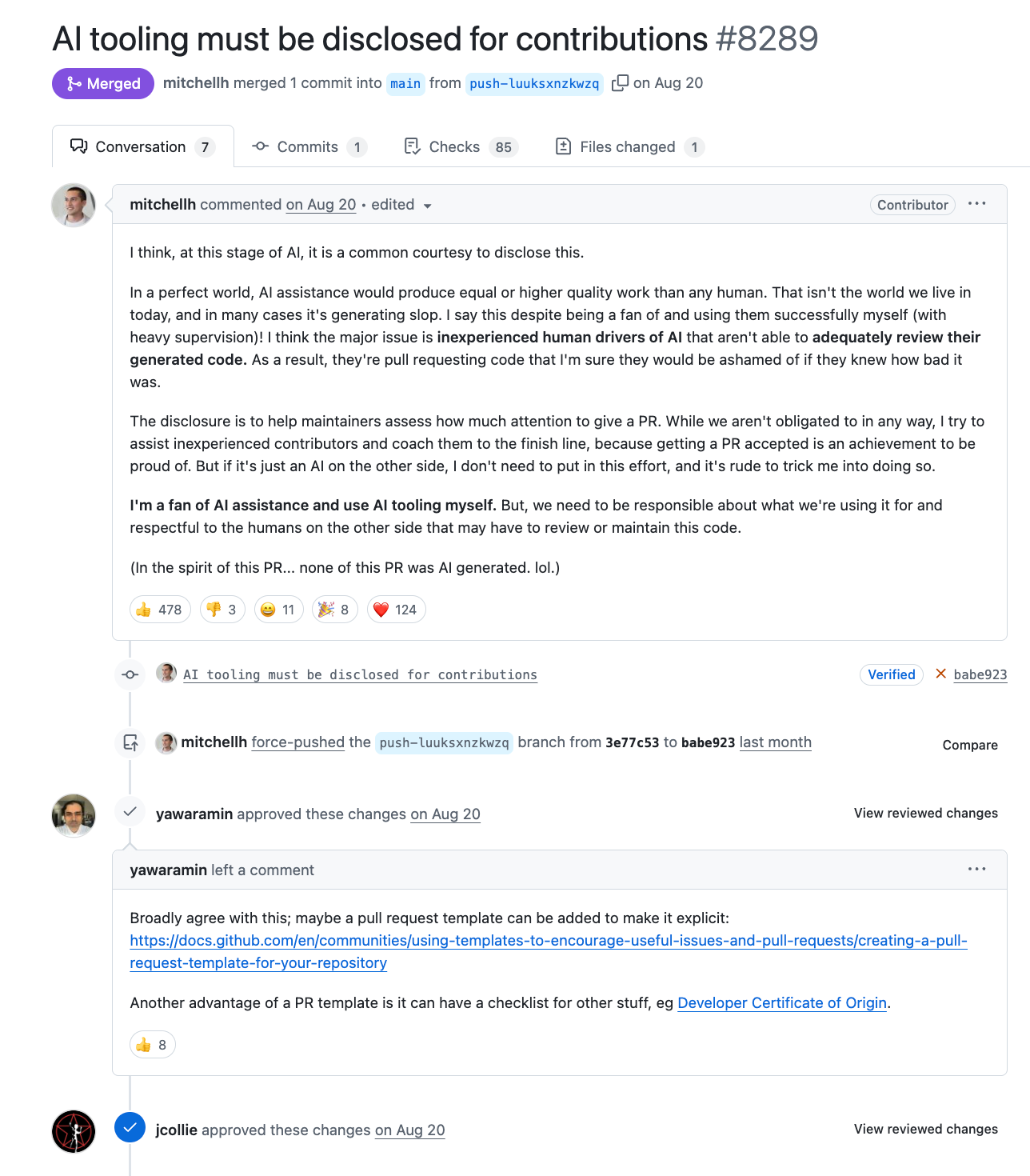

Earlier this year, Mitchell Hashimoto added a new requirement to Ghostty’s contributor guide: every pull request must disclose when AI tools were used. The idea was practical. Too many submissions looked fine on the surface but fell apart under review, and he could tell that some contributors had pasted AI-generated code they did not fully understand.

“Before AI, I might get one bad PR every six months,” he said. “Now it feels like every other week.”

The change was not about policing people. It was about giving reviewers context before they invest time and attention in a review. In the pull request that introduced the rule, he wrote, “The disclosure is to help maintainers assess how much attention to give a PR. While we aren’t obligated to in any way, I try to assist inexperienced contributors and coach them to the finish line. But if it’s just an AI on the other side, I don’t need to put in that effort.”

Behind the policy was a deeper frustration. He had seen an explosion of what he calls “AI slop”: code that looked plausible but lacked understanding. “There’s good-intention slop and bad-intention slop,” he said in his talk with us at SpecStory. “The difference is whether the person tried to understand what they were doing.”

That distinction matters. Hashimoto recalled a contributor who left the community after receiving harsh feedback on a low-quality PR. “Stuff like that makes me feel bad because I think it wouldn’t have happened ten years ago,” he said. “Back then, I would have been more patient. But now that bad contributions happen so often, I lose my patience immediately.”

Within weeks of the rule taking effect, about fifty percent of all Ghostty pull requests included an AI disclosure. The numbers confirmed what he suspected: AI had become a routine part of contribution. The question was no longer whether it should be used, but how.

For Maintainers: Disclosure is essential, but it is only the first step. The challenge is helping good-faith contributors without drowning in noise.

For Contributors: Show your work. Help reviewers see your thinking, not just your diff.

Since that initial change, Hashimoto has continued to shape Ghostty’s culture around transparency. In his blog post “Vibing a Non-Trivial Ghostty Feature,” he described how he uses tools like Amp to design and implement complex features, documenting the messy and experimental steps that most developers hide. The post captured the same philosophy that drives Ghostty’s contributor policy: AI can be part of the process, but contributors should show how it influenced their work so that reviewers can understand the intent behind each change.

Seeing the intent behind the code

What frustrates Hashimoto is not that people use AI, but the absence of visible reasoning.

“As a reviewer, I do not care what the AI said. The AI output is noise. I want to see the contributor’s thinking.”

When he opens a PR, he looks for signs of understanding: the prompts, the decisions, the corrections along the way. Bad PRs, he said, tend to show a “slop zone” where the contributor is clearly fishing for code without comprehension. “I want to see that you understand what you’re doing, or at least trying to.”

Well-structured AI sessions, by contrast, build trust. They reveal thought process and intention. For Hashimoto, that trail of reasoning is what separates a responsible contributor from a careless one. The checkbox saying “AI used” is not enough. What matters is the visible proof that a human actually thought through the work.

For Contributors: Don’t just say you used AI. Show how you used it. Your prompts tell the story of your thinking.

Learning to drive with AI

Hashimoto is not only a maintainer reviewing AI-assisted work. He uses AI daily and experiments with different workflows. For planning and exploration, he often runs multiple coding agents side by side, comparing their results. “Usually no one agent wins,” he said. “One captures the main idea. Another remembers an edge case. I pull the best parts together by hand.”

This ensemble approach costs more time and compute, but it helps him triangulate solutions. It also highlights a broader truth: AI is not a single oracle, but a chorus of imperfect advisors.

When it comes to implementation, he enforces one rule on himself. “If the AI writes something I do not understand, I stop and study it,” he said. “I try to recreate it myself. If I cannot reach the same diff, I need to learn more.”

Despite being an experienced systems programmer, he calls himself “a very inexperienced JavaScript front-end person.” When the AI generates front-end code, he reads through it line by line to understand the imports, data flow, and dependencies before committing anything.

He treats AI not as an answer machine but as a learning accelerator. It lets him build something real first, then study it deeply enough to make it his own.

For Contributors: Never commit code you cannot explain. AI should help you learn faster, not think less.

Better scaffolding for contributors

Rather than hope contributors discover effective workflows by chance, Hashimoto has started shipping small helper tools directly in the Ghostty repository.

One of his favorites is a custom Amp slash command he built that fetches GitHub issues and reformats them into clean Markdown for AI planning sessions. “I use it five times a day,” he said. Commands like this give contributors a clean starting point for structured reasoning before they even begin generating code.
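This article does not show the command’s code, so the following is only a minimal Python sketch of the reformatting step. It assumes the issue has already been fetched as JSON shaped like GitHub’s REST API issue object (`number`, `title`, `body`, `labels`, `user.login`), with comments passed separately. The function name `issue_to_markdown` and the output layout are hypothetical; fetching and the Amp slash-command wiring are left out.

```python
from __future__ import annotations

from typing import Any


def issue_to_markdown(issue: dict[str, Any], comments: list[dict[str, Any]] | None = None) -> str:
    """Render a GitHub-issue-shaped dict (plus optional comments) as a Markdown brief."""
    # Keys mirror the GitHub REST API issue object; absent keys fall back to defaults.
    labels = ", ".join(label["name"] for label in issue.get("labels", [])) or "none"
    author = issue.get("user", {}).get("login", "unknown")
    body = (issue.get("body") or "").strip() or "_No description provided._"

    lines = [
        f"# Issue #{issue['number']}: {issue['title']}",
        "",
        f"- Author: @{author}",
        f"- Labels: {labels}",
        "",
        "## Description",
        "",
        body,
    ]

    # Append each comment under its own heading so the discussion stays readable.
    if comments:
        lines += ["", "## Discussion"]
        for comment in comments:
            who = comment.get("user", {}).get("login", "unknown")
            text = (comment.get("body") or "").strip()
            lines += ["", f"### @{who}", "", text]

    return "\n".join(lines) + "\n"


# Usage with a hypothetical issue payload.
example = {
    "number": 1,
    "title": "Example issue title",
    "body": "Steps to reproduce go here.",
    "labels": [{"name": "bug"}],
    "user": {"login": "example-user"},
}
print(issue_to_markdown(example))
```

Keeping the output as plain Markdown headings and bullets means any agent can consume it, and a human can skim the same brief before a planning session starts.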

Hashimoto draws a line between tooling that guides good habits and tools that just generate noise. “The engagement-bait trend of massive prompt libraries,” he said, “that’s just noise.”

Scaffolding, for him, is not about automation but about making good behavior easy and visible. The right structure helps contributors think more clearly and lets others follow their reasoning. When the repository itself encodes these habits, people do not just read the guidelines; they practice them with every contribution.

For Maintainers: Don’t just write contribution rules or guidelines. Build and ship the actual tools that embody your project’s best practices for AI-assisted contribution.

The next generation of developer tooling

Prompt blame: tracing code back to intent

Hashimoto’s vision for better tooling starts with accountability. He envisions a future system where version control can tell you not just who wrote a line of code, but why.

“It would be useful to point at a line of code and say what part generated this,” he said.

He calls one idea “prompt blame,” a nod to git blame. Instead of only showing who wrote a line, it would show which prompt created it and where the human stepped in. That kind of trace answers a familiar review question: why did this change happen in the first place?
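Prompt blame is described here as an idea, not a tool, so the Python sketch below is only an illustration under one assumption: every edit is recorded with its origin (a prompt or a direct human edit) at the moment it is applied. `Origin`, `apply_edit`, and `prompt_blame` are hypothetical names, not an existing API.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Origin:
    """Who produced a line: a prompt run by someone (AI) or a direct human edit."""
    kind: str                  # "ai" or "human"
    author: str                # person who ran the prompt or typed the edit
    prompt: str | None = None  # prompt text when kind == "ai"


def apply_edit(lines: list[str], origins: list[Origin], start: int, end: int,
               new_lines: list[str], origin: Origin) -> None:
    """Replace lines[start:end] with new_lines, tagging each new line with its origin."""
    lines[start:end] = new_lines
    origins[start:end] = [origin] * len(new_lines)


def prompt_blame(lines: list[str], origins: list[Origin]) -> list[str]:
    """Annotate each line with its origin, in the spirit of `git blame`."""
    rows = []
    for number, (text, origin) in enumerate(zip(lines, origins), start=1):
        if origin.kind == "ai":
            source = f"ai via {origin.author}: {origin.prompt!r}"
        else:
            source = f"human ({origin.author})"
        rows.append(f"{number:>3} | {source} | {text}")
    return rows


# Usage: an AI draft followed by a human correction on one line.
lines: list[str] = []
origins: list[Origin] = []
apply_edit(lines, origins, 0, 0, ["for attempt in range(3):", "    data = fetch()", "    break"],
           Origin("ai", "alice", "add a retry loop around fetch()"))
apply_edit(lines, origins, 2, 3, ["    if data: break"], Origin("human", "alice"))
print("\n".join(prompt_blame(lines, origins)))
```

The key design choice is that provenance travels with each line through every edit, so a later human correction overwrites the AI tag only for the lines it actually touched.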


Annotating AI sessions: the human commentary layer

For Hashimoto, visibility is not just about machine behavior but also about human intent. He advocates for annotation: giving humans a way to mark parts of an AI session with notes such as “This part was experimental. This part was me learning. This part actually informed the final PR.” It’s the difference between showing just your final answer and showing your thought process. These annotations exist for the next human who reads the code.
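One way to picture that annotation layer is a minimal Python sketch, assuming a session is stored as a list of transcript turns and that each annotation covers an inclusive range of turns. The three tags mirror the examples Hashimoto gives; the `Annotation` type and the `turns_tagged` helper are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum


class Tag(Enum):
    EXPERIMENTAL = "experimental"
    LEARNING = "me learning"
    INFORMED_PR = "informed the final PR"


@dataclass(frozen=True)
class Annotation:
    first_turn: int  # inclusive index into the session transcript
    last_turn: int   # inclusive index into the session transcript
    tag: Tag
    note: str        # free-form human commentary


def turns_tagged(transcript: list[str], annotations: list[Annotation], tag: Tag) -> list[str]:
    """Return the transcript turns covered by annotations with the given tag, in order."""
    wanted: set[int] = set()
    for annotation in annotations:
        if annotation.tag is tag:
            wanted.update(range(annotation.first_turn, annotation.last_turn + 1))
    return [turn for index, turn in enumerate(transcript) if index in wanted]


# Usage with a placeholder transcript.
transcript = [
    "user: first idea", "assistant: draft A",
    "user: explain this module", "assistant: explanation",
    "user: implement approach B", "assistant: draft B",
]
notes = [
    Annotation(0, 1, Tag.EXPERIMENTAL, "dead end, dropped"),
    Annotation(2, 3, Tag.LEARNING, "reading up before changing anything"),
    Annotation(4, 5, Tag.INFORMED_PR, "basis of the final diff"),
]
print(turns_tagged(transcript, notes, Tag.INFORMED_PR))
```

A reviewer could then start from the turns that informed the PR and open the experimental ones only when needed.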

Interleaving human and AI edits

The other gap he points to is temporal. Most coding tools show the AI’s diffs but not the developer’s.

“It shows all the AI diffs but doesn’t show the human diffs,” he said. “You lose the part where the human is actually thinking.”

Hashimoto imagines a unified history that interleaves both, where every refactor, correction, or course change by a person sits alongside the AI’s suggestions. Code review would then show a full collaboration, not a one-sided transcript.
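If both the AI’s diffs and the human’s edits were logged as timestamped events, building that unified history would reduce to merging two sorted streams. The sketch below assumes such logs exist (the `Event` type and `render` helper are hypothetical) and uses Python’s standard `heapq.merge`, which requires each input to already be sorted by the key.

```python
from __future__ import annotations

import heapq
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    timestamp: float  # seconds since the session started
    actor: str        # "ai" or "human"
    summary: str      # short description of the diff


def interleave(ai_events: list[Event], human_events: list[Event]) -> list[Event]:
    """Merge two already time-ordered streams into one history.

    On equal timestamps, heapq.merge keeps inputs in argument order,
    so AI events sort before human events at the same instant.
    """
    return list(heapq.merge(ai_events, human_events, key=lambda e: e.timestamp))


def render(events: list[Event]) -> list[str]:
    """Format each event as one line of a review-friendly timeline."""
    return [f"[{e.timestamp:6.1f}s] {e.actor:<5} {e.summary}" for e in events]


# Usage with hypothetical session logs.
ai_log = [Event(1.0, "ai", "draft parser"), Event(5.0, "ai", "add tests")]
human_log = [Event(3.0, "human", "rename variables"), Event(5.0, "human", "fix edge case")]
print("\n".join(render(interleave(ai_log, human_log))))
```

Merging rather than re-sorting keeps the cost linear in the number of events, which matters if every micro-edit is recorded.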

The opening beyond Git

Experience with AI-assisted reviews has pushed Hashimoto toward a larger conclusion. Traditional version control was not designed for rapid human and machine collaboration.

“If someone wanted to invent a new version control system, this is the biggest opening since Git,” he said.

Git tracks authors and commits. It does not track the reasoning or conversations that produced them.

Git was built for snapshots, not for continuous, conversational iteration between humans and machines. Hashimoto advises the team behind Jujutsu, and his advice to them reflects this thinking: make it agent-friendly. In his words, a modern system should “over-aggressively create commits,” capturing every micro-diff so humans and agents can safely experiment, revert, and compare ideas.

The real opportunity, he says, lies in metadata. “How do you attach the prompts? How do you attach co-authorship?” Git was not built to track the dialogue that produces a change. A new system could.
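Today’s Git can only approximate this with free-form text. Commit-message trailers such as `Co-authored-by:` (a convention GitHub recognizes for co-authorship) sit in the last paragraph of a message, and `git interpret-trailers` can add or parse them. The Python sketch below builds and reads such a block; `Prompt-Session` is a hypothetical key invented for illustration, and the parser is a simplified version of Git’s own rules.

```python
from __future__ import annotations


def build_message(subject: str, body: str, trailers: list[tuple[str, str]]) -> str:
    """Compose a commit message whose final paragraph is a block of trailers."""
    trailer_block = "\n".join(f"{key}: {value}" for key, value in trailers)
    return f"{subject}\n\n{body}\n\n{trailer_block}\n"


def parse_trailers(message: str) -> list[tuple[str, str]]:
    """Read 'Key: value' lines from the last paragraph.

    Simplified: Git's own parser is more lenient (for example, it can accept
    a trailer block that mixes in some non-trailer lines).
    """
    last_paragraph = message.strip().split("\n\n")[-1]
    trailers = []
    for line in last_paragraph.splitlines():
        key, sep, value = line.partition(": ")
        if not sep or " " in key:
            return []  # the last paragraph is ordinary prose, not trailers
        trailers.append((key, value))
    return trailers


# Usage: co-authorship plus a hypothetical pointer to the AI session.
message = build_message(
    "Fix flicker on resize",
    "Reworked the redraw path after comparing two agent drafts.",
    [("Co-authored-by", "Example Person <person@example.com>"),
     ("Prompt-Session", "session-0001")],
)
print(parse_trailers(message))
```

Git stores these lines as ordinary message text with no schema behind them, which is exactly the gap a purpose-built system could fill.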

Intent: version control for the AI era

That question of how to attach prompts, reasoning, and co-authorship to real code history is exactly what SpecStory’s upcoming project, Intent, is designed to solve.

Intent is a new version control system built from the ground up for AI-assisted development. It automatically captures every AI conversation, links it to code changes, and creates a searchable decision timeline. Built on CRDTs (Conflict-free Replicated Data Types), it synchronizes work continuously without merge conflicts.
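SpecStory does not describe Intent’s data model here, so the sketch below is a generic textbook CRDT, not Intent’s implementation. It is a grow-only set (G-Set) of checkpoints: each replica adds records independently, and merging is set union, which is commutative, associative, and idempotent, so replicas converge without conflicts regardless of sync order. `Checkpoint` and `CheckpointLog` are hypothetical names.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class Checkpoint:
    replica: str  # machine or agent that recorded the checkpoint
    seq: int      # per-replica counter, so (replica, seq) is unique
    note: str


@dataclass
class CheckpointLog:
    """Grow-only set (G-Set) CRDT holding checkpoints from any number of replicas."""
    items: set[Checkpoint] = field(default_factory=set)

    def add(self, checkpoint: Checkpoint) -> None:
        self.items.add(checkpoint)

    def merge(self, other: CheckpointLog) -> CheckpointLog:
        # Union is commutative, associative, and idempotent, so every replica
        # ends up with the same state no matter how often or in what order it syncs.
        return CheckpointLog(self.items | other.items)

    def history(self) -> list[Checkpoint]:
        # A deterministic display order; it does not capture causality between replicas.
        return sorted(self.items, key=lambda c: (c.replica, c.seq))


# Usage: two replicas record work independently, then sync in opposite orders.
laptop, agent = CheckpointLog(), CheckpointLog()
laptop.add(Checkpoint("laptop", 1, "rename config option"))
agent.add(Checkpoint("agent", 1, "draft parser"))
agent.add(Checkpoint("agent", 2, "add tests"))
print(laptop.merge(agent).items == agent.merge(laptop).items)
```

A G-Set cannot delete entries, and real collaborative text editing needs richer sequence CRDTs; this example only shows why merges of this kind cannot conflict.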

Where Git relies on human discipline to document changes, Intent captures context automatically. Imagine asking months later, “Why did we choose JWT instead of sessions?” and seeing the full conversation that led there: the alternatives discussed, the reasoning behind the choice, and the exact lines of code that came from it.

Key capabilities include:

Automatic versioning: Every save becomes a versioned checkpoint.

AI conversation tracking: Links code diffs to their originating chat sessions.

Conversational search: Queries like “When did we add authentication?” return the full decision trail.

CRDT-based sync: Real-time collaboration with no merge conflicts.

Human-in-the-loop history: Interleaves AI-generated and human edits into one continuous timeline.

In many ways, Intent turns Hashimoto’s wishlist (prompt blame, session annotation, and human-AI interleaving) into infrastructure. It makes reasoning first-class, so context never gets lost.

For Maintainers: Version control should capture not just the code, but the conversations that shaped it.

For Contributors: The best contribution is one that shows how you thought, not just what you typed.

The future of transparent collaboration

After hundreds of AI-assisted reviews, Hashimoto’s view is clear. Open source does not have an AI problem. It has a transparency problem. The goal is not to forbid AI. The goal is to make the human judgment in the loop visible.

He imagines a norm where sharing AI sessions is as common as sharing diffs, where maintainers can check not only what changed but why, and where contributors can demonstrate the care behind their work. “If people shared their sessions, it would help a lot,” he said. “I would have loved to see how my open source heroes actually built a feature.”

Culture and tooling will meet in the middle. Disclosure sets expectations. Reasoning trails and richer metadata make those expectations practical. That is the direction SpecStory is betting on. When the thinking travels with the code, teams move faster, communities stay healthier, and code review becomes a conversation grounded in intent rather than guesswork.